Azure Data Factory is a cloud-based data integration service that allows you to create data-driven workflows in the cloud for orchestrating and automating data movement and data transformation.

Azure Data Factory does not store any data itself. It allows you to create data-driven workflows that orchestrate the movement of data between supported data stores and the processing of data using compute services in other regions or in an on-premises environment. It also allows you to monitor and manage workflows using both programmatic and UI mechanisms.

Why Azure Data Factory?

Azure Data Factory (ADF) is a service used to transform an organization's raw big data from relational, non-relational and other storage systems, and to integrate it for use with data-driven workflows. This helps companies map strategies, attain goals and drive business value from the data they possess.

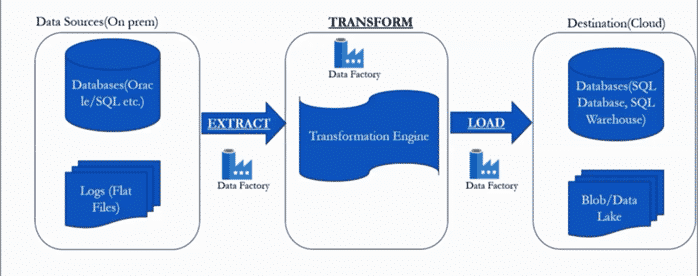

Let’s take an example. An organization has a lot of data on premises. The data is stored across multiple databases such as Oracle, MySQL and SQL Server, and there is also flat-file data such as log files and auditing data. The organization is expanding rapidly, and its data volume is growing exponentially. To meet this demand, the organization decides to move all of its databases from its data centre to the cloud, to get the benefits of flexibility, scalability and so on.

To move the data to the cloud, the organization follows this process:

- The first phase is extracting the data: we connect to the source and get all the data.

- The second phase is transforming the data. Once the data is extracted, we transform it through data cleansing, applying business rules, data quality checks and so on.

- The final phase is loading the data. Once the data is transformed, it is loaded into the destination, which here is the Microsoft Azure cloud. Azure provides multiple ways of loading and storing the data, such as SQL databases or a SQL data warehouse, as well as storage for unstructured data like log files.
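
The three phases above can be sketched in a few lines of Python. This is only a minimal illustration of extract, transform and load, not how Data Factory itself is implemented: the sample log, the column names and the in-memory SQLite database standing in for the cloud destination are all assumptions made for the example.

```python
import csv
import io
import sqlite3

# Hypothetical flat-file source: a small audit log in CSV form.
RAW_LOG = """username,event,amount
alice,purchase,120.50
bob,purchase,
 carol ,refund,30
alice,purchase,not-a-number
"""


def extract(raw_text):
    """Phase 1: connect to the source and read every row."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Phase 2: cleanse the data and apply a simple quality check."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # quality check: drop rows with a missing or invalid amount
        clean.append((row["username"].strip(), row["event"].strip(), amount))
    return clean


def load(rows, connection):
    """Phase 3: write the transformed rows to the destination."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS audit (username TEXT, event TEXT, amount REAL)"
    )
    connection.executemany("INSERT INTO audit VALUES (?, ?, ?)", rows)
    connection.commit()


def run_etl(raw_text, connection):
    """Run the three phases in order and return what ended up in the destination."""
    load(transform(extract(raw_text)), connection)
    query = "SELECT username, event, amount FROM audit ORDER BY rowid"
    return connection.execute(query).fetchall()


if __name__ == "__main__":
    # An in-memory SQLite database stands in for the cloud data store.
    print(run_etl(RAW_LOG, sqlite3.connect(":memory:")))
```

Keeping each phase in its own function mirrors the process described above: the source and destination can be swapped without touching the cleansing rules.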

How does Data Factory work?

The Data Factory service allows you to create data pipelines that move and transform data, and then run those pipelines on a specified schedule (hourly, daily, weekly, etc.). This means that the data consumed and produced by workflows is time-sliced, and the pipeline mode can be set to scheduled (for example, once a day) or one-time.
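
To make the idea of time-sliced data concrete, here is a small Python sketch that splits a period into the windows a scheduled pipeline would process one run at a time. It models the concept only; the `time_slices` helper and the `INTERVALS` table are hypothetical names, not part of any Data Factory API.

```python
from datetime import datetime, timedelta

# Hypothetical mapping from a schedule name to the length of one slice.
INTERVALS = {
    "hourly": timedelta(hours=1),
    "daily": timedelta(days=1),
    "weekly": timedelta(weeks=1),
}


def time_slices(start, end, frequency):
    """Yield (slice_start, slice_end) windows that cover [start, end)."""
    step = INTERVALS[frequency]
    current = start
    while current < end:
        # The last slice is clipped so that it never runs past `end`.
        yield current, min(current + step, end)
        current += step


if __name__ == "__main__":
    for window in time_slices(datetime(2024, 1, 1), datetime(2024, 1, 4), "daily"):
        print(window)
```

Under this model, a one-time run is simply a single window, while a scheduled pipeline processes one window per run.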

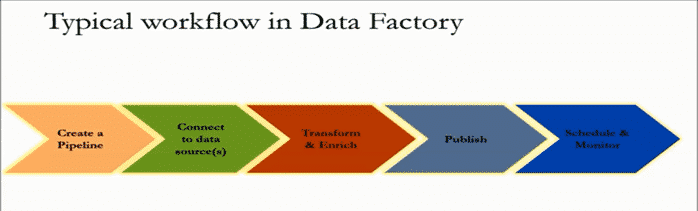

So, what is Azure Data Factory and how does it work? The pipelines (data-driven workflows) in Azure Data Factory typically perform the following three steps:

- Connect and Collect: Connect to all the required sources of data and processing, such as SaaS services, file shares, FTP, and web services. Then use the Copy Activity in a data pipeline to move data from both on-premises and cloud source data stores to a centralized data store in the cloud for subsequent processing and analysis.

- Transform and Enrich: Once data is present in a centralized data store in the cloud, it is transformed using compute services such as HDInsight Hadoop, Spark, Data Lake Analytics, and Machine Learning.

- Publish: Deliver the transformed data from the cloud to on-premises stores such as SQL Server, or keep it in your cloud storage for consumption by BI and analytics tools and other applications.

Data migration activities with Data Factory

With Data Factory, data can be migrated between two cloud data stores, or between an on-premises data store and a cloud data store.

Copy Activity in Data Factory copies data from a source data store to a sink data store. Data Factory supports various data stores as sources or sinks, such as Azure Blob storage, Azure Cosmos DB (DocumentDB API), Azure Data Lake Store, Oracle, and Cassandra. For more information about the data stores that Data Factory supports for data movement activities, refer to the Azure documentation for Data movement activities.
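
As a rough illustration of the source-to-sink idea, the Python sketch below builds a dictionary shaped like a Copy activity definition and prints it as JSON. The layout is modelled loosely on the JSON format Data Factory uses, but treat it as an assumption: the dataset names are hypothetical, and the property names and the `BlobSource` / `AzureDataLakeStoreSink` type names should be checked against the Azure documentation for your Data Factory version.

```python
import json


def build_copy_activity(name, input_dataset, output_dataset, source_type, sink_type):
    """Return a dict shaped like a Copy activity definition (assumed layout)."""
    return {
        "name": name,
        "type": "Copy",
        "inputs": [{"name": input_dataset}],
        "outputs": [{"name": output_dataset}],
        "typeProperties": {
            "source": {"type": source_type},  # where the data is read from
            "sink": {"type": sink_type},      # where the data is written to
        },
    }


# Hypothetical dataset names; the source and sink type names are assumptions.
activity = build_copy_activity(
    name="CopyLogsToDataLake",
    input_dataset="RawLogsBlobDataset",
    output_dataset="CuratedLogsDataLakeDataset",
    source_type="BlobSource",
    sink_type="AzureDataLakeStoreSink",
)

if __name__ == "__main__":
    print(json.dumps(activity, indent=2))
```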

Azure Data Factory supports transformation activities such as Hive, MapReduce, and Spark, which can be added to pipelines either individually or chained with other activities. For more information about the transformation activities that Data Factory supports, refer to the following Azure documentation: Transform data in Azure Data Factory.
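
Chaining means that one activity runs only after the activities it depends on have finished. The Python sketch below shows that ordering with the standard library's `graphlib.TopologicalSorter` (Python 3.9+). The activity names are hypothetical stand-ins: the lambdas are plain Python functions and do not call Hive or Spark, and this is not how Data Factory schedules work internally.

```python
from graphlib import TopologicalSorter


def run_pipeline(activities, depends_on):
    """Run each activity after its dependencies and return (run order, results)."""
    # static_order() yields every activity after all of its predecessors.
    order = list(TopologicalSorter(depends_on).static_order())
    results = {}
    for name in order:
        # Feed each activity the outputs of the activities it depends on.
        inputs = [results[dep] for dep in depends_on.get(name, ())]
        results[name] = activities[name](*inputs)
    return order, results


# Hypothetical activities: plain functions standing in for real compute steps.
activities = {
    "copy_raw": lambda: [3, 1, 2, None],
    "hive_clean": lambda rows: [r for r in rows if r is not None],
    "spark_aggregate": lambda rows: sum(rows),
}

# Each activity maps to the list of activities it must wait for.
depends_on = {
    "copy_raw": [],
    "hive_clean": ["copy_raw"],
    "spark_aggregate": ["hive_clean"],
}

if __name__ == "__main__":
    order, results = run_pipeline(activities, depends_on)
    print(order)
    print(results["spark_aggregate"])
```

An activity with an empty dependency list corresponds to one added to the pipeline individually; the others form the chain.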

If you want to move data to a data store that Copy Activity doesn’t support, you can use a .NET custom activity in Data Factory with your own logic for copying the data. To learn more about creating and using a custom activity, see Use custom activities in an Azure Data Factory pipeline.